Ted Moskovitz

I'm a member of technical staff working on scaling reinforcement learning at Anthropic. I'm interested in building helpful intelligence with a grounded understanding of the world. Broadly, I'm excited about reasoning, sequential decision-making, and optimization for large-scale models.

Before this, I did my PhD at the Gatsby Computational Neuroscience Unit in London, where I was advised by Maneesh Sahani and Matt Botvinick. I've also interned at DeepMind, where I worked on constrained reinforcement learning, and at Uber AI Labs, where I worked on optimization for large-scale deep learning.

I sometimes put notes, code, and other writing here.

Select Publications

Moskovitz T, Singh A, Strouse D, Sandholm T, Salakhutdinov R, Dragan A, McAleer S (2024). Confronting Reward Model Overoptimization with Constrained RLHF. International Conference on Learning Representations (ICLR). (Spotlight, Top 5% of Submissions)

paper | code

Moskovitz T, O'Donoghue B, Veeriah V, Flennerhag S, Singh S, Zahavy T (2023). ReLOAD: Reinforcement Learning with Optimistic Ascent-Descent for Last-Iterate Convergence in Constrained MDPs. International Conference on Machine Learning (ICML).

paper | website

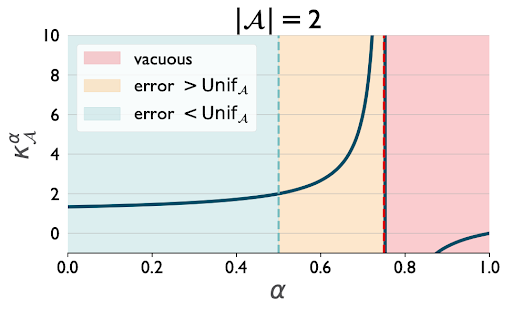

Moskovitz T, Arbel M, Parker-Holder J, Pacchiano A (2022). Towards an Understanding of Default Policies in Multitask Policy Optimization. International Conference on Artificial Intelligence and Statistics (AISTATS). (Best Paper Award Honorable Mention)

paper

Moskovitz T, Wilson SR, Sahani M (2022). A First-Occupancy Representation for Reinforcement Learning. International Conference on Learning Representations (ICLR).

paper | code | talk at Cosyne

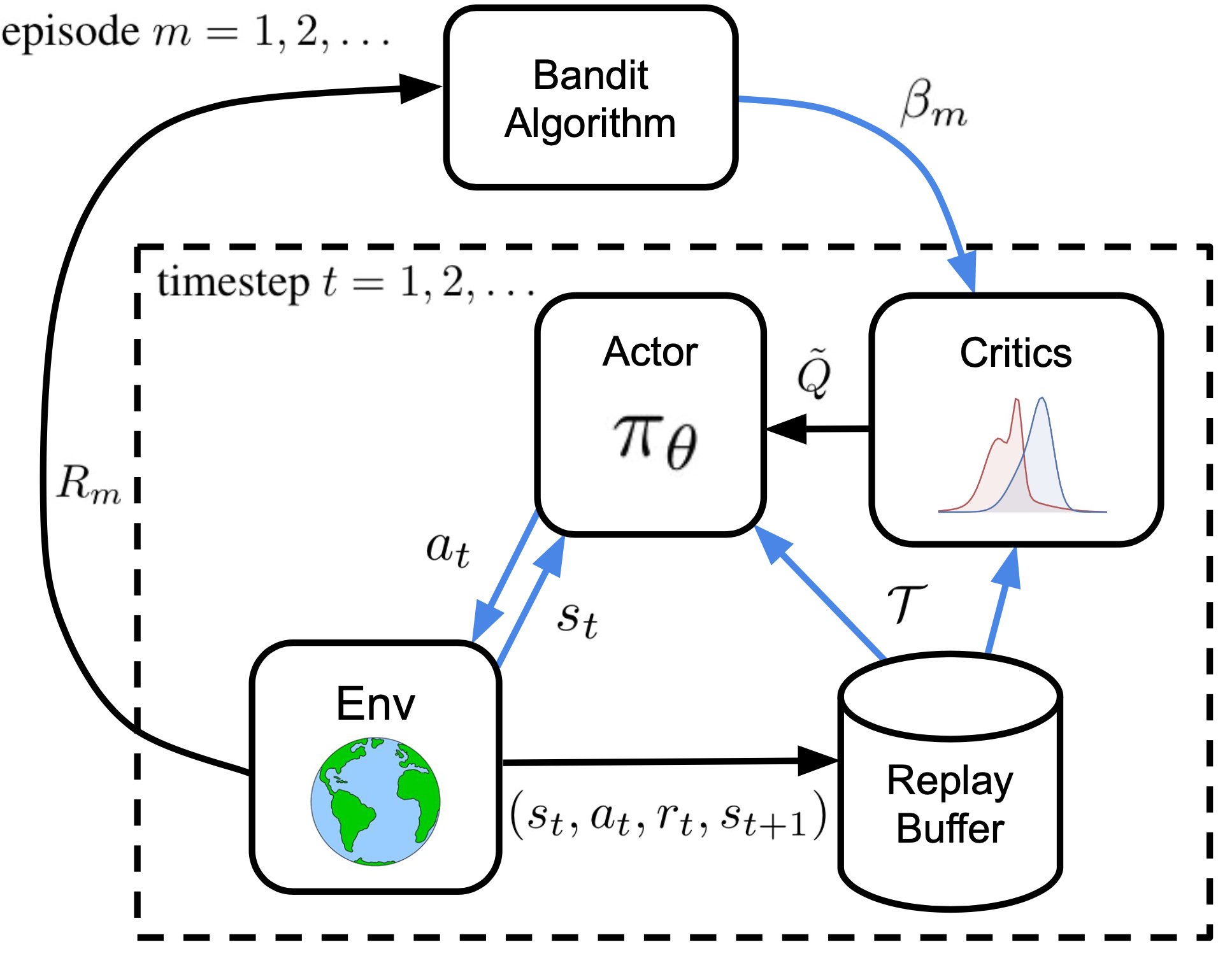

Moskovitz T, Parker-Holder J, Pacchiano A, Arbel M, Jordan MI (2021). Tactical Optimism and Pessimism for Deep Reinforcement Learning. Neural Information Processing Systems (NeurIPS).

paper | code